In this blog post, we describe three references for interpreting student performance and making decisions. Without a reference, student performance cannot be interpreted, and decisions cannot be made. For example, knowing only that a student scored 65 is meaningless. Knowing which measure produced the score adds some meaning, but even then the score is difficult to interpret. With a reference, however, interpretations and decisions become possible.

- If teachers know that comparable (grade-level) students scored 70, they can interpret this performance (65) as close to, though slightly below, that of other students.

- If teachers know that the student’s score has increased by about 7 points each week (58 last week, 51 the week before, and so on), they can conclude that improvement is occurring.

- If experts judge proficiency to be 75, then a score of 65 means the student is near mastery.

In the sections that follow, we describe these three references as norm-referenced, individual-referenced, and criterion-referenced (mastery), respectively. Importantly, each reference supports a different kind of decision.
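To make the contrast concrete, the short sketch below interprets the same score of 65 against each of the three references, using only the numbers from the example above (peer score 70, weekly gain of about 7, proficiency of 75). The variable names are hypothetical, and this is an illustration of the reasoning, not a calculation that easyCBM® performs.

```python
# Numbers taken from the example above; the variable names are hypothetical.
score = 65

# Norm reference: compare with comparable (grade-level) students.
peer_score = 70
norm_gap = score - peer_score  # negative means below peers

# Individual reference: compare with the student's own earlier scores.
history = [51, 58, 65]  # two weeks ago, last week, this week
weekly_gains = [later - earlier for earlier, later in zip(history, history[1:])]
average_gain = sum(weekly_gains) / len(weekly_gains)

# Criterion reference: compare with an expert-judged proficiency standard.
proficiency_cut = 75
points_to_mastery = proficiency_cut - score

print(f"Norm: {norm_gap:+d} points relative to grade-level peers")
print(f"Individual: {average_gain:+.1f} points per week")
print(f"Criterion: {points_to_mastery} points below proficiency")
```

The same number yields three different statements: slightly below peers, improving steadily, and not yet proficient.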

Benchmark Measurement (Norm-Referenced)

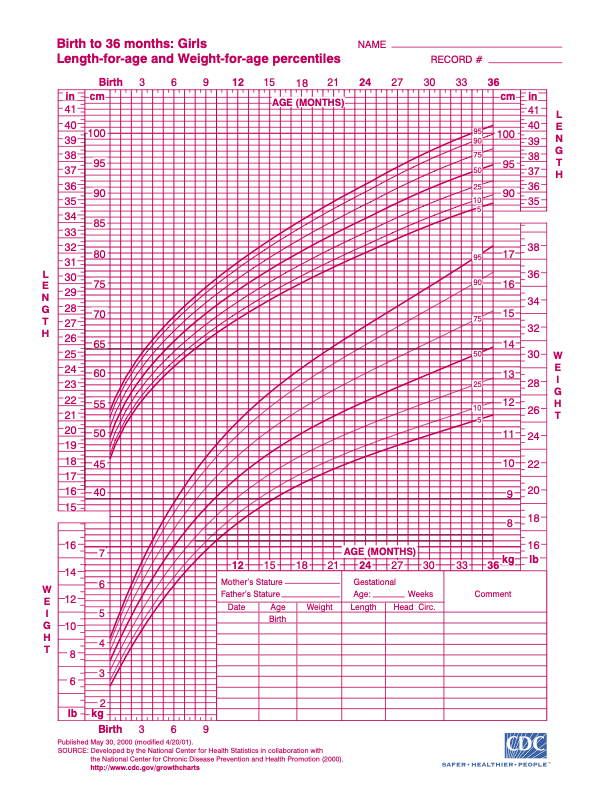

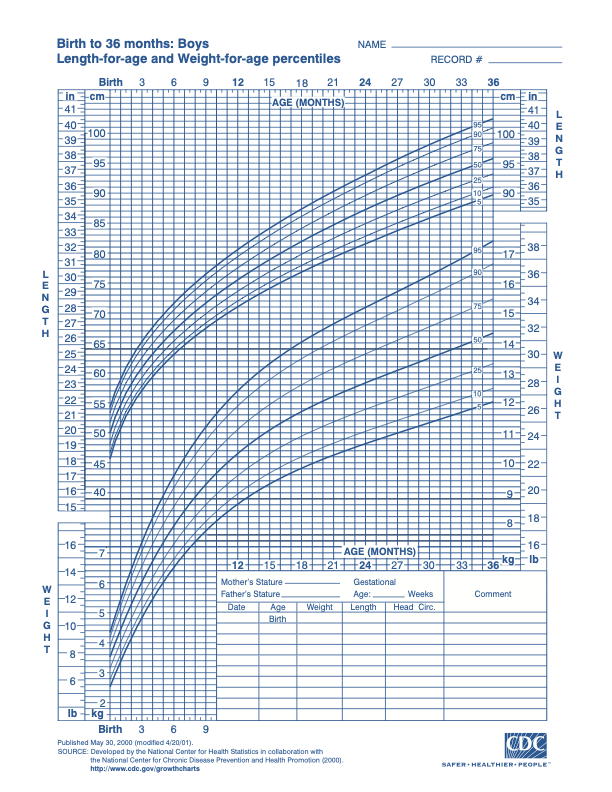

In the early days of curriculum-based measurement (CBM) research, the goal was to develop skill assessments in reading, math, and writing that teachers could use as a health checkup for students, much like the growth charts the Centers for Disease Control and Prevention (CDC) uses to track the length (height) and weight of young children (up to three years old).

Figures above: CDC growth charts for girls and for boys up to 36 months old.

In developing these quick ‘health checks’, the original team at the University of Minnesota spent five years researching a variety of measures that would (a) be brief and easy for teachers and assistants to administer, (b) be technically adequate (reliable and valid), and (c) have alternate forms[1] for viewing progress over time.

Although the initial assessment systems of the 1980s were developed within local educational agencies (LEAs), more standardized systems were eventually developed and distributed by various vendors. easyCBM® is one such system; it is owned by the University of Oregon (UO) and distributed by Riverside Insights. Researchers at UO later established normative values to help teachers identify students at risk of learning problems. These normative values are expressed as percentile ranks (PRs) for seasonal administrations (early, middle, and end of the school year). They are norm-referenced because a student’s performance is interpreted relative to other students at the same grade level.

Important note on percentile ranks (PRs). This metric is simply a rank ordering of performance in which the median (middle score) is the 50th PR (50% of students score above it and 50% below). Because PRs rank students in this way, teachers and administrators can use them to set the level at which they define ‘risk’: for example, the 25th PR (the performance level below which only 25% of students score) or the 15th PR (below which only 15% score). An important caveat: PRs do NOT represent percent correct, so remember to pronounce percentile with its proper ending, ‘ile’.
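The sketch below shows one common way to compute a percentile rank and apply a locally chosen risk cutoff. It assumes the "mid-rank" convention (scores below, plus half of any ties, as a percentage of the group), which places the median at the 50th PR; whether easyCBM® norms use exactly this convention is not stated here. The function names, the cutoff parameter, and the benchmark scores are hypothetical.

```python
from bisect import bisect_left, bisect_right


def percentile_rank(score, norm_scores):
    """Percent of the norm group scoring below `score`, counting ties as half.

    This mid-rank convention is an assumption for illustration; it places
    the median of the norm group at the 50th PR.
    """
    ordered = sorted(norm_scores)
    below = bisect_left(ordered, score)            # scores strictly below
    ties = bisect_right(ordered, score) - below    # scores equal to `score`
    return 100 * (below + 0.5 * ties) / len(ordered)


def is_at_risk(score, norm_scores, cutoff_pr=25):
    """Flag a score whose PR falls below a locally chosen cutoff (hypothetical)."""
    return percentile_rank(score, norm_scores) < cutoff_pr


# Hypothetical fall benchmark scores for one grade level (median = 62.5).
fall_scores = [40, 45, 50, 55, 60, 65, 70, 75, 80, 85]

print(percentile_rank(62.5, fall_scores))  # the median sits at the 50th PR
print(percentile_rank(65, fall_scores))
print(is_at_risk(42, fall_scores))
```

A PR of 55 for the score of 65 says nothing about how many items the student answered correctly; it only places the score just above the middle of this hypothetical group.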

Progress Monitoring Measurement (Individual-Referenced)

Progress monitoring measurement in easyCBM® shares the characteristics of the benchmark measures (BMs), the most important of which is comparable forms, so that progress over time can be documented. Progress measures (PMs), however, differ in the grade levels and dates at which they are administered: they are individualized for the student, and the student’s later performance is compared with that same student’s earlier performance.

- Whereas BMs are administered at grade level (to make norm-referenced comparisons), PMs can (and should) be administered at the grade level at which the student is performing. For example, if a Grade 3 student is just learning to read (focusing on decoding skills), teachers can select measures from Grade 1 or 2 that target the skills the student needs to acquire (and that reflect the content of instruction). These may include early word reading and passage fluency measures, or any of the ‘off-grade’ math measures.

- Not only can (and should) PMs be individualized in grade level, they can (and should) also be administered frequently enough that teachers can view progress over time and adjust instruction accordingly. This frequency differs sharply from BM administrations, which use fixed grade-level passages at three specific date ranges (early, middle, and late in the school year).

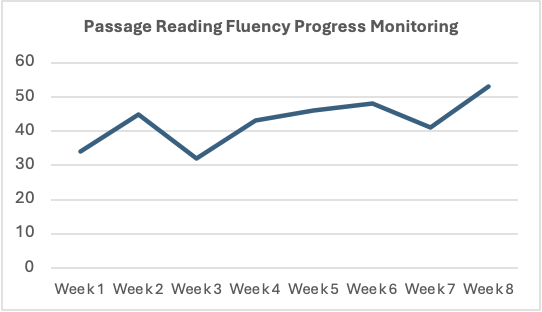

By frequently (e.g., weekly) administering measures appropriate for the grade level at which the student is performing, teachers can document progress in three important ways by viewing (a) the slope, or rate of change over time, (b) the amount of variation around this slope, and (c) the general level of performance. In the figure, the student is progressing slightly over eight weeks, shows only slight variation, and is generally performing between 40 and 50 words correct per minute. When a different intervention is introduced (usually marked by a vertical line at that point, with scores before and after the line left unconnected), teachers can see whether changes in performance coincide with the intervention.
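The three views of progress (slope, variation, level) can be summarized numerically. The sketch below is a minimal example under stated assumptions: it uses an ordinary least-squares slope for the rate of change, the standard deviation of scores around the fitted line for variation, and the mean for level. These statistics, the function name `summarize_progress`, and the weekly scores are all hypothetical choices for illustration, not easyCBM® outputs. Each intervention phase is summarized separately, mirroring the unconnected scores on either side of the vertical line.

```python
from statistics import mean, pstdev


def summarize_progress(scores):
    """Summarize equally spaced (e.g., weekly) scores as slope, variation, level.

    slope: ordinary least-squares change per week
    variation: standard deviation of the scores around the fitted line
    level: mean score
    These particular statistics are illustrative assumptions.
    """
    weeks = range(1, len(scores) + 1)
    w_bar, s_bar = mean(weeks), mean(scores)
    sxy = sum((w - w_bar) * (s - s_bar) for w, s in zip(weeks, scores))
    sxx = sum((w - w_bar) ** 2 for w in weeks)
    slope = sxy / sxx
    intercept = s_bar - slope * w_bar
    residuals = [s - (intercept + slope * w) for w, s in zip(weeks, scores)]
    return slope, pstdev(residuals), s_bar


# Hypothetical words correct per minute, before and after a new intervention.
before = [42, 44, 43, 46, 45, 47, 46, 48]
after = [49, 51, 52, 54]

for label, phase in (("Before", before), ("After", after)):
    slope, variation, level = summarize_progress(phase)
    print(f"{label}: slope {slope:.2f}/week, variation {variation:.2f}, level {level:.1f}")
```

With these made-up data the slope rises from 0.75 to 1.6 words per week after the intervention line, which is the kind of change teachers look for when judging whether an intervention is working.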

Diagnostic Measurement (Mastery-Referenced)

easyCBM® offers this type of measurement in its item-level reports, where teachers can view ‘Christmas tree’ distributions of items (arranged from the least difficult item at the top to the most difficult item at the bottom). Each branch lists the names of students who responded incorrectly to that item. Thus, at the top, only one or two students’ names may be present, and the ‘branch’ extends only slightly. By the last, most difficult item, many students may have responded incorrectly, so the branch extends much farther.

This type of measurement is mastery-oriented because teachers can target specific skills for instruction. For example, in reading, the word reading fluency (WRF) measure may indicate that specific word types (e.g., consonant blends) are difficult for only a few students, whereas other word types (e.g., vowel teams) are difficult for most students. In this case, teachers may focus instruction on consonant blends for the few students reading them incorrectly but target vowel teams for most of the students.
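The ‘Christmas tree’ layout can be approximated with a few lines of code. The sketch below assumes that item difficulty is measured by how many students missed the item, orders items from fewest to most misses, and lists the students on each branch; easyCBM®’s actual report logic may differ. The function name `christmas_tree`, the word-type item labels, and the student responses are hypothetical.

```python
def christmas_tree(responses):
    """Order items from least to most difficult, listing students who missed each.

    `responses` maps an item label to {student name: True if answered correctly}.
    Difficulty is approximated by the count of incorrect responses; this is an
    assumption for illustration, not a description of easyCBM's reports.
    """
    missed = {
        item: sorted(name for name, correct in answers.items() if not correct)
        for item, answers in responses.items()
    }
    # Fewest misses first (top of the tree), most misses last (bottom).
    return sorted(missed.items(), key=lambda pair: len(pair[1]))


# Hypothetical WRF items labeled by word type, for a small class.
responses = {
    "vowel team: rain": {"Ana": False, "Ben": False, "Cam": False, "Dee": True},
    "blend: stop": {"Ana": True, "Ben": False, "Cam": True, "Dee": True},
    "digraph: ship": {"Ana": True, "Ben": False, "Cam": True, "Dee": False},
}

tree = christmas_tree(responses)
for item, names in tree:
    # Branch length grows with the number of students who missed the item.
    print(f"{item:<18}{'*' * len(names):<5}{', '.join(names)}")
```

Short branches (here, the consonant blend) point to small-group reteaching, while long branches (the vowel team) point to instruction for most of the class.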

This measurement system is also available in CBMSkills® (https://cbmskills.com), a partner assessment system for easyCBM®. This platform includes 12 specific reading and math domains (modules), each organized around similar items, that students can take individually on a self-administered digital platform (e.g., using a tablet or computer). Students earn individual medals for mastery at three levels: Bronze, Silver, and Gold.

Summary

easyCBM® allows teachers to reference student performance in three different ways to support different decisions. If teachers want to know about risk, a norm-referenced view is needed, using the same grade-level passages administered at the same time to ALL students. If the decision is about progress, an individual reference is needed: rather than being compared with other students, the student is compared with themselves. Finally, if the decision is about targeting specific skill deficits, a diagnostic (mastery) reference is needed, and specific items must be analyzed.

[1] To create alternate forms, each form must include comparable items across the school year so that performance can be compared over time. Therefore, each form includes both preview items (content not yet taught) and review items (content already taught).